It is raining near dusk, and drivers have turned on their headlights. Soon the slick road is reflecting headlights, street lights, the neon signs of the mall, and the sun low in the west. The road looks like a kaleidoscope of sparkles. The natural light is fading, the artificial lights are not yet bright enough, and the road is hard to make out.

The wipers have not worked properly for a long time, the view ahead is as murky as mud, and shadows at the roadside seem to sit in the middle of the road. Amid the confusion, a car with no headlights sits in your blind spot. You switch on the turn signal and feel your way toward the middle lane. Suddenly, you think of the advanced active safety system in your car.

Three possible outcomes in the near future

This story could end in three different ways: the technology wins, the human overrides the machine, or an accident may occur. It may go like this: before you realize what is happening, the turn signal has started to flash, and your car accelerates slightly into the middle lane, then brakes gently to avoid a nearby pedestrian you could not see, while warning the vehicles behind.

It may also go like this: as you turn the wheel, you feel the car's lane-keeping function working to pull the car back into its original lane. You persist anyway, with the turn signal on, and complete the lane change, ignoring the angry horns of other vehicles. At that moment a pedestrian steps out from the roadside, and the windshield head-up display does not show you this person in time. Fortunately, you manage to avoid him.

Or, maybe: as you turn, you hear an unfamiliar but very harsh alarm. Before you can work out what is going on, a car rushes out of your blind spot, and you have no choice but to make a panic stop. The anti-lock brakes kick in on the slippery road, alarming you further, and the car finally stops next to a frightened pedestrian. Badly shaken, you glance at the dashboard and learn the reason for the frantic alarm: the advanced driver assistance system (ADAS) was turned off.

These are three completely different results for the same scene, and they stem from three completely different ADAS designs. To illustrate these differences and understand their impact, we will examine the implementation of ADAS in detail. A keynote and an open forum at this year's Design Automation Conference (DAC) described the subject well.

Starting from the sensor

An ADAS is a logical pipeline, and the best way to understand it is to start with its wide range of inputs. James Buczkowski, research director and researcher at Ford Automotive Electronics and Electrical Systems, said: "As automation advances, the challenge is that a single type of sensor is not enough."

In the open forum, the moderator, Cadence editor-in-chief Brian Fuller, also asked the experts to discuss ADAS sensors. According to Edward Ayrapetian, chief design engineer at sensor system supplier Nuvation, "We currently use many types of sensors. In general, you will see a combination of lidar, conventional radar, and high-resolution video cameras. But as image-processing algorithms improve, everything keeps changing."

Ayrapetian explained that each type of sensor has its advantages and disadvantages, and the other experts agreed. For example, lidar is the primary sensor on Google's driverless car research platform. The hallmark of this technology is the dome that houses a laser and a rotating mirror. Lidar is good at outlining the shape of objects and providing distance data, both of which object recognition algorithms need. Lidar is also relatively insensitive to background light.

Ayrapetian cautions, "But Google's lidar sensors cost as much as $100,000." Moreover, in low visibility such as fog or snow, the light reflected from an object's surface carries very little information, so lidar becomes hard to use. Ayrapetian mentioned a well-known desert festival: "We took a self-driving truck to Burning Man. What we learned is that lidar cannot tell a big cloud of dust from a brick wall."

Radar is, to a certain extent, a very good complementary technology. If you choose the frequency, waveform, and receive-side signal processing carefully, radar can resist interference and works in practice under low visibility and poor illumination. Christian Schumacher, director of the ADAS business unit at Continental Automotive Systems and Technology, added: "Radar is very good at obtaining distance data. However, radar is not good at identifying objects." It yields poor shape data and no texture or color information, which leaves object recognition algorithms with little to work on.

Hence the camera. With the advent of low-cost, high-resolution cameras, video has become the key sensor technology for ADAS, providing a wealth of data for object recognition. Multi-camera systems can see around certain visual obstructions and can provide limited but adequate distance information from disparity. But cameras have their own problems and cannot work when visibility is poor. Schumacher cautioned: "Cameras are very demanding about lighting. We need to find a balance: a system that works well in all driving conditions without too many sensors."
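The disparity-based distance mentioned above follows the standard pinhole stereo relation Z = f * B / d for a rectified camera pair. The sketch below is only an illustration: the function names and the focal length, baseline, and disparity values are assumptions, not figures from the panel.

```python
def stereo_depth_m(focal_px: float, baseline_m: float, disparity_px: float) -> float:
    """Depth of a point seen by a rectified stereo pair: Z = f * B / d."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive (zero means the point is at infinity)")
    return focal_px * baseline_m / disparity_px


def depth_error_m(focal_px: float, baseline_m: float, disparity_px: float,
                  disparity_err_px: float = 1.0) -> float:
    """First-order depth uncertainty: dZ is roughly Z^2 / (f * B) * dd."""
    z = stereo_depth_m(focal_px, baseline_m, disparity_px)
    return z * z / (focal_px * baseline_m) * disparity_err_px


# Hypothetical rig: 1000 px focal length, 0.3 m baseline, 20 px measured disparity.
depth = stereo_depth_m(1000.0, 0.3, 20.0)   # about 15 m
error = depth_error_m(1000.0, 0.3, 20.0)    # about 0.75 m per pixel of disparity error
```

Because the error grows with the square of the distance, stereo range is useful nearby and degrades quickly farther out, which is one way to read "limited but adequate."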

Better algorithms that let the sensors complement one another can reduce the number of sensors needed. Ayrapetian said: "We need to tailor the sensors to the problem and to how critical it is. If the requirements are narrowed, we can improve the algorithms. We can basically replace lidar with better image processing on the video stream."

Sensor fusion

Even with breakthroughs in image processing, the experts agree that advanced sensor fusion is still needed to extract accurate information about the vehicle's surroundings from several different types of data: the objects present, their speed and acceleration, their likely behavior, and so on. An important and complicated question is how much processing to do at each point in the system. One option is to send all the raw data to a single fusion engine, for example a Kalman filter or a deep-learning neural network, and see what it can infer. This idea has some merit. For example, one particular kind of network, the convolutional network, is very good at object recognition.
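To make the Kalman filter option concrete, here is a minimal sketch of a scalar filter that blends successive range readings of differing reliability. It is an illustration only, not any panelist's design: the class name, the static-state model, and every numeric value are assumptions.

```python
class ScalarKalman:
    """1-D Kalman filter tracking a single quantity, e.g. range to an object in meters."""

    def __init__(self, initial_estimate: float, initial_var: float, process_var: float):
        self.x = initial_estimate   # current state estimate
        self.p = initial_var        # variance (uncertainty) of the estimate
        self.q = process_var        # uncertainty added between measurements

    def predict(self) -> None:
        # Static-state model: the estimate is unchanged, only its uncertainty grows.
        self.p += self.q

    def update(self, measurement: float, measurement_var: float) -> float:
        # The Kalman gain weights the measurement by its reliability.
        gain = self.p / (self.p + measurement_var)
        self.x += gain * (measurement - self.x)
        self.p *= (1.0 - gain)
        return self.x


# Hypothetical readings as (range in meters, sensor variance in m^2),
# ordered from the most precise sensor to the least precise one.
readings = [(20.2, 0.01), (20.6, 0.25), (19.0, 4.0)]
kf = ScalarKalman(initial_estimate=readings[0][0],
                  initial_var=readings[0][1],
                  process_var=0.0)
for z, var in readings[1:]:
    kf.predict()
    kf.update(z, var)
```

The fused estimate stays close to the most precise reading, and its variance ends up smaller than that of any single sensor, which is the basic argument for fusion.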

However, designers are more inclined to adopt systems that they understand at the operational level. In a seminar at the Embedded Vision Conference in May, Nathaniel Fairfield, technical director of Google's self-driving car team, said: "Our strategy is to develop simple systems that process the sensor data, and then to fuse the preprocessed data at a more abstract level. We prefer that to running everything through one large filter."

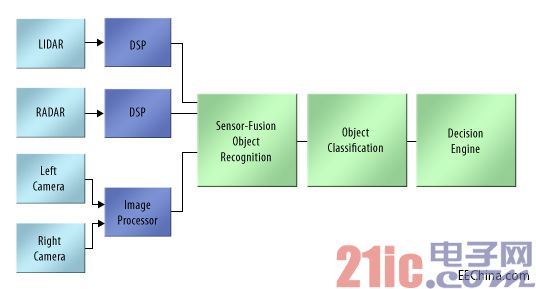

A simplified approach is to arrange the processing steps in logical order (Figure 1). Each sensor performs local signal conditioning and may even adapt automatically based on feedback from downstream. Each sensor then estimates objects locally from the information it has, attaching attributes to the objects it infers.

Figure 1. You can think of ADAS as a processing pipeline that builds an increasingly abstract view of the environment around the car.

For example, the lidar may report an object at bearing 030, moving from right to left, at a distance of about 20.24 meters. The radar will also report that there may be an object at the same location, standing out against the ground-clutter background. A pair of high-resolution cameras will see the object: it looks very much like a golden retriever, at a bearing between 025 and 035, about 15 meters away.

This information is passed to the fusion engine, which forms a consolidated view of the object's presence with the most reliable attributes attached, for example position, speed, size, and color. This information is then sent to a classification engine, which labels the object as a dog.
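One simple way to attach "the most reliable attributes" is to gate sensor reports by bearing and then, for each attribute, keep the value from the sensor that reports the lowest variance. The sketch below assumes that approach. The `Report` structure, the 10-degree gate, the variances, and the speed and width values are all hypothetical, and only the lidar and camera bearings and ranges echo the example in the text.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass
class Report:
    sensor: str
    bearing_deg: float
    attributes: Dict[str, float]   # attribute name -> measured value
    variances: Dict[str, float]    # attribute name -> variance (lower is more reliable)


def bearing_gap(a: float, b: float) -> float:
    """Smallest absolute angle between two bearings, in degrees."""
    return abs((a - b + 180.0) % 360.0 - 180.0)


def fuse(reports: List[Report], gate_deg: float = 10.0) -> Dict[str, Tuple[float, str]]:
    """Merge reports near the first report's bearing.

    For every attribute, keep the value from the lowest-variance sensor.
    Returns attribute name -> (value, name of the sensor it came from).
    """
    if not reports:
        return {}
    anchor = reports[0].bearing_deg
    best: Dict[str, Tuple[float, float, str]] = {}   # name -> (variance, value, sensor)
    for report in reports:
        if bearing_gap(report.bearing_deg, anchor) > gate_deg:
            continue   # too far off bearing to be the same object
        for name, value in report.attributes.items():
            variance = report.variances[name]
            if name not in best or variance < best[name][0]:
                best[name] = (variance, value, report.sensor)
    return {name: (value, sensor) for name, (_, value, sensor) in best.items()}


reports = [
    Report("lidar", 30.0, {"range_m": 20.24, "width_m": 0.6}, {"range_m": 0.01, "width_m": 0.04}),
    Report("radar", 30.0, {"range_m": 20.5, "speed_mps": 1.5}, {"range_m": 0.25, "speed_mps": 0.04}),
    Report("camera", 30.0, {"range_m": 15.0, "width_m": 0.5}, {"range_m": 9.0, "width_m": 0.01}),
    Report("radar", 120.0, {"range_m": 40.0}, {"range_m": 0.25}),   # a different object
]
fused = fuse(reports)
```

Here the range comes from the lidar, the speed from the radar, and the width from the camera, while the report at bearing 120 is ignored. A production system would more likely weight and combine the values than pick a single winner.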

This seems logical, but it does not settle the matter. There is no agreement on which type of algorithm to use at each stage. Schumacher observed: "At the moment, most of the steps are rule-based. However, rule-based systems require a lot of support. We will see some algorithms that use methods people cannot readily explain."

Victor Ng-Thow Hing, chief scientist of the Honda North American Institute, agrees. "Deep neural networks will far outperform people and will even exceed rule-based algorithms. In some respects they will override the rules. I think there should be a hybrid approach."

Ayrapetian said, "Either way, it's important to be realistic about how much of the problem is solved. At present, autonomous vehicles can't stay in their lane 100% of the time. In unfamiliar environments, neural networks don't work very well. Even Google's cars rely on very detailed maps to identify objects and locate themselves. We have not yet reached the level of intelligence needed to fully understand sensor data."

Strategy classification

Classification alone does not solve every problem. If the objects are classified successfully, the result is a list of objects with measured attributes such as distance and speed, each labeled with its probable identity, such as a person, a green shrub, or an architectural symbol. During classification, objects should also be tagged with their importance to the ADAS decision process: serious hazard, navigational cue, or irrelevant background. The uncertainty of each classification should be assessed as well. The classification function should use a variety of filters, neural networks, and rule-based classification trees, and reach its conclusions by fusing data from different sensors.
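The object list described above could be represented along the following lines. This is a sketch of one possible data structure, not a format used by any of the panelists: the field names, the three relevance levels as an enum, the 0.8 confidence threshold, and the sample scene are assumptions.

```python
from dataclasses import dataclass
from enum import IntEnum
from typing import List


class Relevance(IntEnum):
    BACKGROUND = 0       # irrelevant to the decision
    NAVIGATION_CUE = 1   # useful for navigation
    HAZARD = 2           # may require a response


@dataclass
class ClassifiedObject:
    label: str           # probable identity, e.g. "pedestrian"
    confidence: float    # classifier confidence, 0.0 to 1.0
    distance_m: float
    speed_mps: float
    relevance: Relevance


def prioritize(objects: List[ClassifiedObject]) -> List[ClassifiedObject]:
    """Most relevant first; within a relevance level, nearest first."""
    return sorted(objects, key=lambda o: (-o.relevance, o.distance_m))


def is_uncertain(obj: ClassifiedObject, threshold: float = 0.8) -> bool:
    """Flag classifications the decision stage should treat with caution."""
    return obj.confidence < threshold


scene = [
    ClassifiedObject("shrub", 0.95, 8.0, 0.0, Relevance.BACKGROUND),
    ClassifiedObject("road sign", 0.90, 30.0, 0.0, Relevance.NAVIGATION_CUE),
    ClassifiedObject("pedestrian", 0.70, 15.0, 1.4, Relevance.HAZARD),
]
ranked = prioritize(scene)
```

Carrying the confidence alongside the label lets the decision stage treat a doubtful "pedestrian" differently from a certain one instead of discarding the uncertainty at the classifier boundary.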

At this point the nature of the problem changes considerably. At least under favorable conditions, the ADAS knows its location and environment in great detail. It must now decide what to do next, particularly when uncertainty remains. For now, this means a rule-based system.

Ayrapetian explains: "You can use neural networks to identify objects and even to locate your car in its surroundings. However, you need rules to make decisions and to explain them."

The basic reason for using a rule-based system to decide how an ADAS responds may lie in how we feel about the way neural networks work. You can train a deep-learning network to interpret the environment more accurately than people do, say by correctly interpreting 99% of the test video. However, even a 1% chance of misreading the golden retriever will not satisfy people: we want a completely reliable rule that the car must never hit a puppy.

A deeper problem concerns system verification and regulatory compliance. Many engineers feel strongly that before you can trust a design, indeed before you can even plan a verification strategy, you must understand how the design works. Moreover, compliance with certain regulations requires that each component of the design be traceable back to an original requirement. All of these are real problems for neural networks. It is difficult to understand fully what is happening at a given stage of the network, let alone trace it back to a particular section of the system requirements document.

In contrast, rule-based systems are generally very intuitive: you can read a rule and know why it is there. However, rule-based systems are also limited in practice. It is hard to design one that works in an unforeseen environment, because that requires good abstraction and rules that hold beyond the particular environments they were written for. As the rules multiply, both the computational load and the predictability of behavior become problems. For example, adding a rule that looks perfectly reasonable can inadvertently create an infinite loop in the rule-based decision tree, or produce a rule list that cannot be traversed in real time.
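The infinite-loop risk is easy to reproduce with two rules that each look sensible alone. The sketch below uses a toy forward-chaining evaluator with a pass budget so that a rule set that never settles is detected rather than left to spin. The rule names, the state keys, and the budget of 20 passes are all hypothetical.

```python
from typing import Callable, Dict, List, Optional

State = Dict[str, object]
Rule = Callable[[State], Optional[State]]   # returns a changed copy, or None if it does not fire


def run_rules(state: State, rules: List[Rule], max_passes: int = 20) -> State:
    """Apply the rules repeatedly until none fires; raise if the pass budget runs out."""
    for _ in range(max_passes):
        fired = False
        for rule in rules:
            new_state = rule(state)
            if new_state is not None and new_state != state:
                state = new_state
                fired = True
        if not fired:
            return state
    raise RuntimeError("rule set did not settle within the pass budget")


def avoid_left_obstacle(s: State) -> Optional[State]:
    if s["lane"] == "left" and s["obstacle_left"]:
        return {**s, "lane": "right"}
    return None


def avoid_right_obstacle(s: State) -> Optional[State]:
    if s["lane"] == "right" and s["obstacle_right"]:
        return {**s, "lane": "left"}
    return None


rules = [avoid_left_obstacle, avoid_right_obstacle]

# With one obstacle the rules settle on the free lane.
settled = run_rules({"lane": "left", "obstacle_left": True, "obstacle_right": False}, rules)

# With obstacles on both sides the two rules undo each other indefinitely.
try:
    run_rules({"lane": "left", "obstacle_left": True, "obstacle_right": True}, rules)
    oscillation_detected = False
except RuntimeError:
    oscillation_detected = True
```

A pass budget only detects the problem at run time. It does not say what the car should do instead, which is why such interactions have to be found during verification.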

Beyond the mix of neural networks and rules, there is a third factor. Many system designers find it impossible to derive a correct model of the environment from on-board sensor data alone, so they want data from the infrastructure: from fixed sensors on roads and at intersections, and from other vehicles. Ng-Thow Hing observed that "in Japan, the plan is to use intelligent infrastructure to address the tracking problem." Road sensors can locate vehicles and measure their speed very accurately, easing the burden of object recognition and classification and thus reducing uncertainty in the decision unit. Data from other vehicles supplies another key parameter that in-vehicle sensors struggle to infer: where those vehicles intend to go.

However, infrastructure data requires a high degree of social organization, political will, and a level of investment that only a few countries can afford. Most of the world's ADAS designers therefore cannot rely on it yet. Schumacher cautioned: "In the end, you still need map information and infrastructure information. We still have time to 'stop over.'"

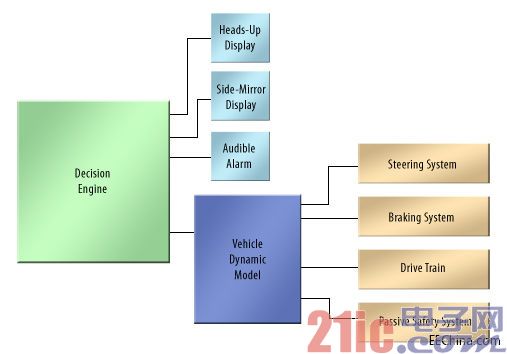

Regardless of which implementation is used in the ADAS decision stage, it must produce two types of output (Figure 2). The first type, which is always required, goes to the human-machine interface to inform and alert the driver. The second type, present only in some designs and used only in certain situations, acts directly on the vehicle's main systems: steering, braking, transmission, and so on. If all of this fails, there is still the passive safety system.

Figure 2. The decision system has two basic types of output stream.
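The two output streams can be pictured as a decision record in which the driver alert is mandatory and the actuator command is optional. The sketch below is hypothetical throughout: the type names, the 2-second time-to-collision threshold, and the braking value are assumptions chosen only to show the shape of the interface.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class DriverAlert:
    message: str
    urgent: bool


@dataclass
class ActuatorCommand:
    brake_fraction: float       # 0.0 (no braking) to 1.0 (full braking)
    steering_delta_deg: float   # requested change in steering angle


@dataclass
class Decision:
    alert: DriverAlert                          # always produced
    command: Optional[ActuatorCommand] = None   # only when intervention is permitted


def decide(time_to_collision_s: float, intervention_enabled: bool) -> Decision:
    """Always alert the driver; act on the vehicle only if urgent and permitted."""
    urgent = time_to_collision_s < 2.0
    alert = DriverAlert("Obstacle ahead", urgent)
    if urgent and intervention_enabled:
        return Decision(alert, ActuatorCommand(brake_fraction=0.8, steering_delta_deg=0.0))
    return Decision(alert)
```

Making the alert a required field and the command an optional one mirrors the distinction in the text: every design talks to the driver, but only some are allowed to touch the controls.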

Surprisingly, the hottest controversy concerns the human-machine interface: when should it act, and how? Ford's Buczkowski suggested in his DAC keynote that the driver should feel "this car understands me, and that's how it works."

This frames the problem: for the driver, ADAS is personal. Schumacher cautioned: "If there are too many false reactions, or if the system is too cautious, the driver will turn it off." Ng-Thow Hing agrees: "The complication is that there are many different driving styles. A cautious driver welcomes alerts or even direct intervention. An impulsive driver resents interference, even interference that safe driving demands. If drivers feel that the ADAS makes them look bad in front of passengers, they will turn the system off permanently."

Schumacher explains: "For example, when the lane-keeping function alerts the driver, the passengers will notice it very clearly. If it is designed to intervene directly, it can do so by controlling the steering system or by applying differential braking torque. One of these methods is more effective than the other."

Distraction is also a problem. The human-machine interface must get the driver's attention and suggest the correct response. A warning that carries no guidance only distracts the driver further, especially in an emergency.

Intervention

As we move toward increasingly autonomous cars, ADAS will take more control of the vehicle. This raises two further design challenges: when and how to control the vehicle.

The first problem is the harder of the two. Obviously, the system should not attempt a maneuver that is dangerous or impossible in the current situation. For example, at 60 kph, ADAS must not attempt a sharp right turn. This requirement means that the decision unit must have detailed rules governing when the vehicle-control interface may be used. But given the range of vehicle speeds, headings, and road conditions, a rule-based approach is hard to apply in a real-time control system. ADAS may instead need a continuous dynamic model of the vehicle so that it can compute feasible control inputs from the desired trajectory. That is not a trivial computational load in itself.
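A very rough version of such a feasibility check treats the car as a point mass and asks whether tire friction can supply the centripetal acceleration the turn demands, that is, whether v^2 / r is at most mu * g. This is far simpler than the continuous dynamic model the text calls for, and the friction coefficient and turn radius below are assumed values.

```python
import math

G = 9.81   # gravitational acceleration, m/s^2


def max_cornering_speed_mps(radius_m: float, friction_coeff: float) -> float:
    """Highest speed at which friction can hold the turn: v = sqrt(mu * g * r)."""
    return math.sqrt(friction_coeff * G * radius_m)


def turn_is_feasible(speed_kph: float, radius_m: float, friction_coeff: float = 0.7) -> bool:
    """Point-mass check: can the car follow a turn of this radius at this speed?"""
    speed_mps = speed_kph / 3.6
    return speed_mps <= max_cornering_speed_mps(radius_m, friction_coeff)


# Hypothetical 10 m radius turn on a surface with friction coefficient 0.7.
feasible_at_60 = turn_is_feasible(60.0, 10.0)   # False: 60 kph is about 16.7 m/s, limit is about 8.3 m/s
feasible_at_25 = turn_is_feasible(25.0, 10.0)   # True: 25 kph is about 6.9 m/s
```

On a wet road the friction coefficient drops and the speed limit drops with it, so even this crude check has to be re-evaluated continuously as conditions change.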

The second question is another manifestation of the personality problem. Should ADAS always control the car? If the driver's actions conflict with the computed strategy, should it intervene? Or should it defer to the driver until the last moment at which an accident can still be avoided? If the driver ignores its prompts, how should ADAS respond? And should the answer to that last question depend on circumstances, such as a drunk driver or an endangered pedestrian, or should it be adjustable or adapt to the driver's personality?

No turning back

All of these issues involve value judgments by the designer, and the answers can lead to very different ADAS designs, costs, and performance. So how do you judge the quality and personality of the system when you buy a car?

Ng-Thow Hing cautioned: "The quality and performance of cars on the market are mixed. We need very experienced experts to help car buyers understand what they are getting."

Schumacher is more pessimistic, at least for the US market. He said: "In the United States, simple products are usually successful. In Europe, the situation is completely different. Car buyers will study the data and make comparisons before making a decision."

We know that many issues in ADAS remain unresolved, and that there are many different ways to solve them. Different methods have their own strengths in solving basic problems such as lane keeping, speed management, and collision avoidance. But this will also give ADAS more and more personality, one that both drivers and passengers will experience.

So, viewed openly, any outcome is possible, depending on small differences in ADAS design choices. In fact, the three scenarios described here are only three of a large number of possible outcomes, each of which affects the driver and the people nearby, and ultimately affects safety.

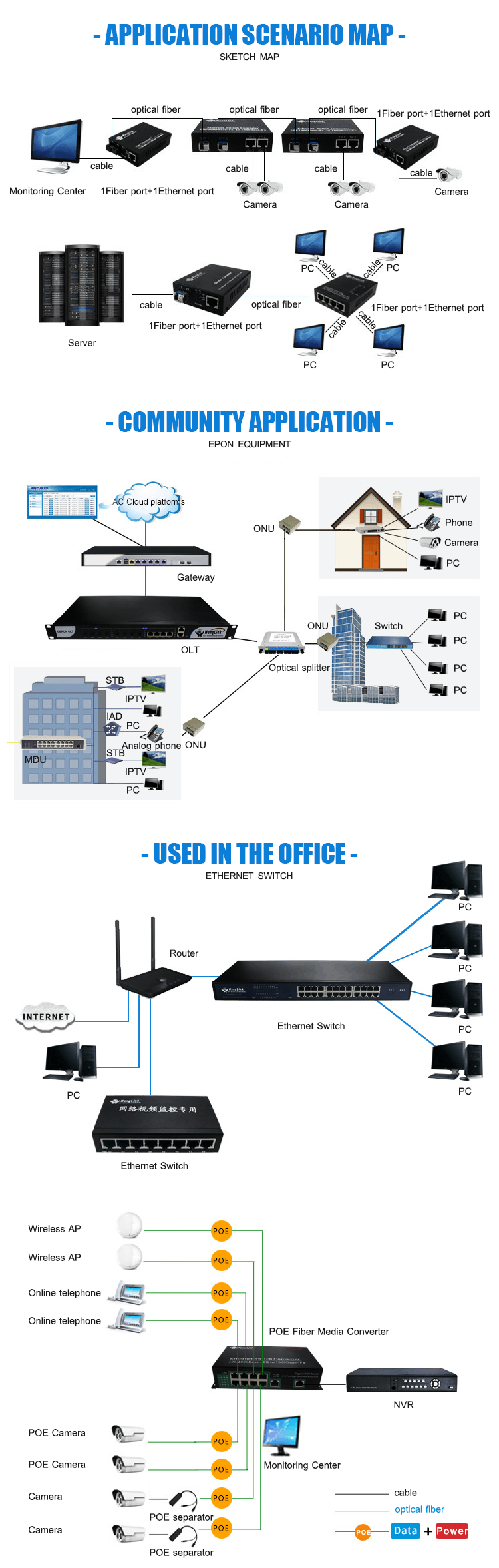

Non-standard POE Switch , providing 1 10/100Mbps Up-link port and 8 10/100Mbps POE ports.It designs with 60W industrial power adapter, each port max output power is 15.4 Watts. Besides, Each port is installed fuse to keep safety. It is passive POE Switch can provide Power for Wireless AP, IP Camera which is nonstandard PD devices. It is widely used in Enterprise,hotel, interlocking institution, Campus network, etc.

Features

1. 8-Port 100M POE Switch with 7 PoE Ports and 1 Uplink Port

2. Built-in power supply

3. Flow Control Type:Full duplex adopts IEEE 802.3x standard, half duplex adopts back pressure standard

Auto-flip ports(Auto MDI/MDIX)

4. Maximum PoE Power: 60W( All PoE ports, that is port 1 to port 4), and each port maximum power is 15.4W

Store-and-forward architecture

5. 8x 10/100 Mbps automatic adjustment RJ45 ports

6. Compatible with IEEE 802.3 10Base-T and IEEE 802.3u 100Base-TX standard

Product Application:

Non-Standard Poe Switch,Poe Switch/4 Port,Port Switch Poe,8 Port Ethernet Network

Guangdong Steady Technology Co.LTD , https://www.steadysmps.com