Issues between memory and other system components often occur at the interface level, making them challenging to detect. This article introduces a specialized testing tool that simplifies identifying and resolving these interface-related problems, ultimately enhancing the reliability of your design.

In the past, signal integrity (SI) testing was widely used by engineers during the design phase to ensure product quality. While SI is valuable in early development stages, its effectiveness diminishes as designs become more complex. As a result, it is gradually being replaced or supplemented by voltage and temperature margin testing. These newer methods are better suited for uncovering subtle issues that may not be visible through traditional SI approaches.

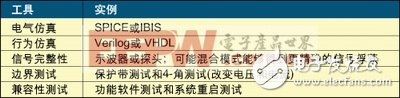

Table 1: Memory design, testing, and verification tools.

Choosing the right tools for memory design, measurement, and verification can significantly reduce engineering time and improve the chances of catching potential issues. Table 1 outlines five key tools essential for memory design. However, this article focuses specifically on tools used for validating functionality and robustness, so not all memory design tools are included here. Table 2 shows when each of these tools is most effective.

5 Stages of Product Development

Debugging without a logic analyzer is nearly impossible for complex designs. However, due to cost and time constraints, logic analyzers are not always the first choice for fault detection. They are typically used for debugging issues identified through compatibility or 4-corner tests. Product development involves five main phases:

Stage 1 - Design

During the initial design phase, there is no physical prototype, so engineers rely solely on simulation tools. This stage requires the use of electrical and behavioral simulations to bring the concept to life.

Stage 2 - Alpha Prototype

The alpha prototype is an early version of the system that may undergo multiple revisions before reaching production. Engineers must conduct thorough testing to ensure that the next prototype is as close to production-ready as possible. Reliable tools play a crucial role at this stage.

The first tools used are startup and software verification, which provide insights into what needs to be adjusted. Due to hardware changes, full software verification may not yet be feasible.

Table 2: Tools to verify memory functionality based on the design phase.

At this stage, SI testing plays a critical role in capturing analog signals from the board. These captures can be compared with device specifications to determine compliance and timing margins. If not compliant, improvements are necessary.
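The comparison of captured signals against device specifications can be sketched as a simple margin calculation. This is a minimal illustration only: the parameter names, spec limits, and measured values below are hypothetical and do not come from any datasheet.

```python
from dataclasses import dataclass


@dataclass
class TimingSpec:
    name: str
    min_ns: float  # minimum value required by the device specification


def timing_margins(measured_ns, specs):
    """Return {parameter: margin_ns}; a negative margin means a violation."""
    return {s.name: measured_ns[s.name] - s.min_ns for s in specs}


# Hypothetical spec limits and oscilloscope measurements, for illustration only.
specs = [TimingSpec("setup", 0.75), TimingSpec("hold", 0.75)]
measured = {"setup": 1.0, "hold": 0.5}

margins = timing_margins(measured, specs)
violations = [name for name, margin in margins.items() if margin < 0]
```

A positive margin indicates how much headroom the board has for that parameter. Any name in `violations` marks a parameter that needs improvement before the next prototype.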

However, margin testing should not be used at this stage, as it is only effective once the hardware is fully developed. Once the tests are complete, engineers make the necessary design adjustments, re-running simulations where needed, to ensure the desired outcome.

Stage 3 - Beta Prototype

By the beta stage, the hardware is nearly complete, with only minor issues remaining. Comprehensive testing ensures the system is ready for production. Software and compatibility tests must be thorough. These tests can be performed independently or in conjunction with margin testing, which should cover a broad range of conditions. Testing under different temperatures and voltage levels helps identify potential memory failures.

At this stage, SI testing has limited value but can still be used to debug functional faults or confirm changes made in the alpha phase. It should not be used to verify signals from unmodified alpha prototypes. Any changes in the beta phase should be verified using electrical or behavioral simulations, or SI testing if needed.

Stage 4 - Production

During production, minimal changes are made to the system. The focus shifts to ensuring consistent product quality, which can last for months or even years. With hundreds or thousands of components involved, maintaining stability is crucial.

Quality tests on components after production ensure a steady supply and prevent production delays. At this stage, the motherboard layout and routing remain largely unchanged. Since SI testing was used earlier, it is no longer necessary. Additionally, SI testing cannot detect memory-related failures. For stable product quality, compatibility and margin testing are the preferred choices.

Stage 5 - Post-Production

While some systems like MP3 players or DVD recorders don’t require long-term quality control, others such as notebooks and mobile PCs may need memory upgrades or support over several years. In these cases, compatibility and margin testing become essential for maintaining product quality.

Figure 1: Typical signal integrity issues captured on an oscilloscope.

Signal Integrity Testing

Engineers often use oscilloscopes to observe circuit behavior and evaluate signals. Figure 1 shows a typical SI capture. In this example, the signal comes from a single DDR SDRAM component, including address group 1, chip select, and various system clocks.

The SI test process involves capturing the waveform of a system signal to evaluate how its voltage changes over time. These captures make signal violations visible. The method is resource-intensive, time-consuming, and requires considerable expertise.

An SI capture like the one in Figure 1 can reveal issues such as ringing, overshoot/undershoot, clock problems, slew-rate and setup/hold-time violations, and bus contention. If any of these cause a system failure, compatibility and margin testing can readily detect it. Once a failure is identified, other tools such as logic analyzers or SI tests can help pinpoint the cause.
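As a rough illustration of how overshoot and undershoot might be flagged in a captured waveform, the sketch below scans voltage samples against upper and lower limits. The sample values and thresholds are made up for the example. Real limits come from the device specification.

```python
def find_excursions(samples, v_high, v_low):
    """Return indices of samples above v_high (overshoot) or below v_low (undershoot)."""
    overshoot = [i for i, v in enumerate(samples) if v > v_high]
    undershoot = [i for i, v in enumerate(samples) if v < v_low]
    return overshoot, undershoot


# Hypothetical samples (volts) of a signal switching high and then low again.
samples = [0.0, 1.2, 2.9, 3.1, 2.6, 2.4, 2.5, 0.3, -0.4, 0.1, 0.0]

# Assumed limits for illustration: flag anything above 2.8 V or below -0.3 V.
over, under = find_excursions(samples, v_high=2.8, v_low=-0.3)
```

Here `over` holds the sample indices that exceed the upper limit and `under` those that fall below the lower limit. A real analysis would also consider how long each excursion lasts.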

Limitations of SI Analysis

SI analysis is becoming increasingly difficult and time-consuming. In FBGA packages, it’s nearly impossible without added probe points. In multi-chip modules (MCMs), signals inside the package are protected, making them inaccessible.

Using probes to measure SI can alter the signal, introducing new issues. Although active or FET probes are available, they are becoming less practical as frequencies rise, especially in point-to-point systems.

For memory quality and stable product performance, SI testing has limitations. Micron conducted extensive internal testing using SI oscilloscopes and found the following:

* SI testing can catch faults and identify major errors early in development.

* It is used to verify board changes.

* After the board design is complete, SI testing has limited value.

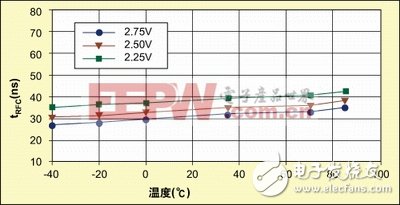

Figure 2: An example of how device specifications vary with voltage and temperature.

Micron's internal test flow has shifted away from SI testing, focusing instead on:

* Compatibility and margin testing to validate systems with memory.

* Using diagnostic tools to isolate failures after compatibility and margin testing identifies an issue.

* If software identifies the fault type (address, row, etc.), the memory chip or module is isolated and tested to replicate the failure.

* If software doesn't provide details, the memory is removed and tested separately.

* Logic analyzers can help identify fault types and violations.

Despite the use of oscilloscopes, experience shows that other tools are more efficient for quick measurements, validation, and debugging. We believe these alternatives allow engineers to troubleshoot and eliminate faults more effectively.

Micron's internal quality control process has led to the following conclusions:

* Regular compatibility and margin testing can expose issues in some systems.

* SI does not find problems that aren’t already detected by memory or system diagnostics.

* SI finds the same faults as other tests, leading to redundant work.

* SI testing is time-consuming, especially when probing a 64-bit data bus and capturing oscilloscope images.

* SI uses expensive equipment like oscilloscopes and probes.

* SI consumes engineering resources, requiring advanced engineers to analyze signal images.

* SI cannot detect all faults; compatibility and margin testing can identify issues that SI misses.

Alternatives to SI Testing

Alternatives to SI testing are used throughout system development, memory quality control, and testing. This section briefly describes these tools and their applications.

Computer systems are ideal for software testing because they can leverage off-the-shelf software, which offers a wide range of memory diagnostic tools. When choosing software tools, focus on those that are regularly upgraded and that incorporate new diagnostic methods for uncovering unknown failure mechanisms.

Unlike PCs, testing consumer electronics, embedded systems, or networking devices is more challenging. For these applications, engineers often develop custom tools or avoid testing altogether. Creating more robust specialized tools can offer greater benefits than SI testing. Note that in some cases, memory-specific tests like MPEG decoding or network packet transmission may not be feasible in the system, so alternative tools should be used.
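A custom memory diagnostic of the kind mentioned above can be quite small. The following sketch shows a walking-ones data-pattern test, one common technique and not necessarily the one any particular vendor uses. It runs against a simulated memory so it can be tried without hardware. `StuckBitMemory` is a hypothetical stand-in that models a single stuck data bit. On a real target the reads and writes would go to the memory under test.

```python
def walking_ones_test(mem, width=8):
    """Write each single-bit pattern to every cell and read it back.

    Returns a list of (address, expected, actual) tuples, one per mismatch.
    """
    failures = []
    for bit in range(width):
        pattern = 1 << bit
        for addr in range(len(mem)):
            mem[addr] = pattern
        for addr in range(len(mem)):
            actual = mem[addr]
            if actual != pattern:
                failures.append((addr, pattern, actual))
    return failures


class StuckBitMemory:
    """Simulated memory with one data bit stuck at 0 at one address."""

    def __init__(self, size, bad_addr, stuck_bit):
        self._cells = bytearray(size)
        self._bad_addr = bad_addr
        self._mask = ~(1 << stuck_bit) & 0xFF

    def __len__(self):
        return len(self._cells)

    def __getitem__(self, addr):
        return self._cells[addr]

    def __setitem__(self, addr, value):
        if addr == self._bad_addr:
            value &= self._mask  # the stuck bit never stores a 1
        self._cells[addr] = value


good = walking_ones_test(bytearray(16))
bad = walking_ones_test(StuckBitMemory(16, bad_addr=5, stuck_bit=3))
```

Because each failure records the address and the expected and actual data, the result also hints at the fault type, which helps when isolating the memory device for further testing.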

Margin Testing

Margin testing exposes hidden issues by subjecting the system to extreme conditions. Two important types are voltage and temperature margin testing. These tests stress DRAM and controllers to reveal potential problems. Figure 2 illustrates how device specifications vary with voltage and temperature.

A common margin test is the 4-corner test, known for its efficiency in detecting memory issues while balancing test time and resources. For a system operating at minimum and maximum voltages of 3.0V and 3.6V, and temperatures of 0°C and 70°C, the four corners are:

* Corner 1: Maximum voltage, maximum temperature – 3.6V, 70°C

* Corner 2: Maximum voltage, minimum temperature – 3.6V, 0°C

* Corner 3: Minimum voltage, maximum temperature – 3.0V, 70°C

* Corner 4: Minimum voltage, minimum temperature – 3.0V, 0°C

The general approach is to stabilize the system at a specific temperature and voltage, then run tests at that corner. If a fault occurs, it should be analyzed. Another option is the 2-corner test, especially when voltage control is limited by regulators. In such cases, testing at maximum and minimum temperatures is suitable.
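The 4-corner procedure can be sketched as a loop over the voltage and temperature extremes. In this minimal sketch, `set_conditions` and `run_test` are hypothetical hooks that a real setup would connect to a thermal chamber, a programmable supply, and a memory diagnostic. The versions shown here are simulated stand-ins so the example runs on its own.

```python
from itertools import product


def four_corners(v_min, v_max, t_min, t_max):
    """Return the four (voltage, temperature) corners, max-voltage corners first."""
    return list(product((v_max, v_min), (t_max, t_min)))


def run_corner_tests(corners, set_conditions, run_test):
    """Stabilize at each corner, run the test, and record pass/fail per corner."""
    results = {}
    for voltage, temperature in corners:
        set_conditions(voltage, temperature)
        results[(voltage, temperature)] = run_test()
    return results


# Simulated stand-ins: the fake test "fails" only at minimum voltage and
# maximum temperature. Real hooks would drive lab equipment instead.
state = {}


def set_conditions(voltage, temperature):
    state["v"], state["t"] = voltage, temperature


def run_test():
    return not (state["v"] == 3.0 and state["t"] == 70)


corners = four_corners(3.0, 3.6, 0, 70)
results = run_corner_tests(corners, set_conditions, run_test)
failing = [corner for corner, passed in results.items() if not passed]
```

With the limits from the example above, `corners` lists the four combinations in the same order as Corners 1 to 4. Each entry in `failing` identifies a corner that should be analyzed further.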

Power Cycle Test

The power cycle test repeatedly restarts the system and includes both cold and warm starts. A cold start is a transition from an inactive state to operation with the system at ambient temperature. A warm start occurs after the system has been running long enough for its internal temperature to stabilize. Errors can occur during startup, for example because of voltage spikes or during memory initialization. Because such failures are often intermittent, they may show up only after many repeated cycles.
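The value of repetition can be shown with a small simulation. The sketch below assumes three caller-supplied hooks (`power_off`, `power_on`, `boot_ok`) that a real rig would connect to a controllable supply and a boot check. `SimulatedSystem` is a hypothetical stand-in whose failure rate is invented purely for illustration.

```python
def power_cycle_test(cycles, power_off, power_on, boot_ok):
    """Cycle power `cycles` times; return the indices of cycles that failed to boot."""
    failed = []
    for i in range(cycles):
        power_off()
        power_on()
        if not boot_ok():
            failed.append(i)
    return failed


class SimulatedSystem:
    """Stand-in for real hardware: fails to boot on every 250th power-up."""

    def __init__(self):
        self.boots = 0
        self.powered = False

    def power_off(self):
        self.powered = False

    def power_on(self):
        self.powered = True
        self.boots += 1

    def boot_ok(self):
        return self.powered and self.boots % 250 != 0


short_run_system = SimulatedSystem()
short_run = power_cycle_test(
    100, short_run_system.power_off, short_run_system.power_on, short_run_system.boot_ok
)

long_run_system = SimulatedSystem()
long_run = power_cycle_test(
    1000, long_run_system.power_off, long_run_system.power_on, long_run_system.boot_ok
)
```

In this simulation the 100-cycle run sees no failures, while the 1000-cycle run records several. This is why a handful of restarts is not enough to rule out an intermittent startup fault.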

Self-Refresh Test

DRAM cells leak charge and must be refreshed regularly to retain data. To save power, self-refresh is typically used when the memory is not being read or written. The memory controller must issue the correct commands when entering and exiting self-refresh; otherwise, data loss may occur. As with the power cycle test, repeating the self-refresh entry and exit cycle can help detect intermittent issues. Systems that do not use self-refresh should skip this test.

Summary of This Article

System-level issues between memory and other components can be subtle and hard to detect. Using the right tools at the right time allows engineers to quickly identify potential problems and enhance design robustness. Re-evaluating compatibility and margin testing, particularly in memory quality control or validation, reduces development time and provides a more comprehensive view of actual faults. This accelerates the quality control process, especially in stabilizing product quality, which is the primary benefit of these tests.
